Ceph Management Web User Interface (UVS Manager)

UVS Manager, Unified Virtual Storage Manager

Unified Virtual Storage (UVS) is a software package that integrates the Linux operating system, Ceph distributed storage software, a management console, and a web-based user interface (UVS Manager) for the Ambedded Mars Ceph appliance.

The UVS Manager automates the most commonly used Ceph management functions, which shortens the learning curve for deploying and operating a Ceph cluster.

The UniVirStor Ceph Management

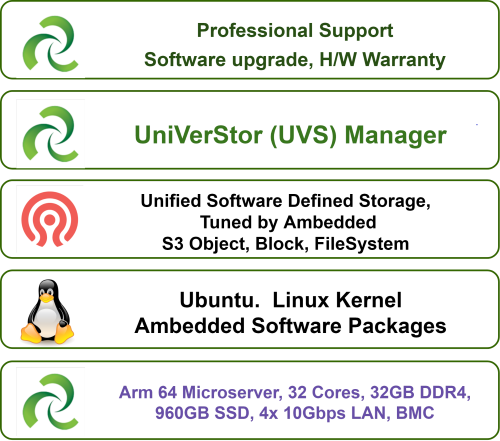

Ambedded provides users with a turnkey solution for Ceph applications. The UniVirStor (UVS) package pre-installs all software components required to run Ceph on the Ambedded Mars server platforms. The major software components are the Linux operating system, the Ceph software, and the UVS Manager.

Users can manage Ceph storage with either the Ceph command-line interface or the UVS Manager. However, managing Ceph from the command line is complex and prone to human error. The UVS Manager automates most of the commonly used processes, so users can deploy, manage, update, and monitor a Ceph cluster quickly and easily.

Performance & Stability Tuning

Running Ceph and Linux stably and with good performance on a server requires expert tuning of both the Ceph and the Linux configuration. Ambedded Ceph appliances are carefully tuned for their ARM microservers to ensure that they perform stably and well.

Key Features

- Preinstalled software packages for deploying a stable Ceph cluster quickly.

- Parallel network configuration shortens deployment time.

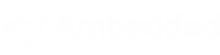

- Users can visually define the CRUSH map according to the infrastructure hierarchy by creating buckets, renaming bucket types, moving buckets, and defining CRUSH rules.

- Create or configure the NTP server and push configurations to hosts for cluster time synchronization.

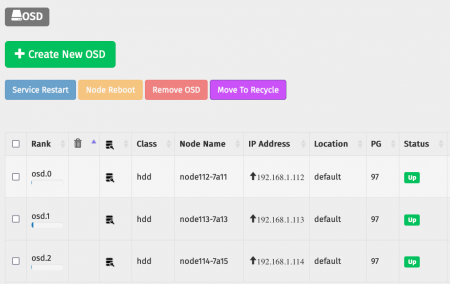
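For readers who also work from the Ceph command line, the CRUSH operations listed above correspond to a few upstream `ceph osd crush` subcommands. The sketch below only builds and prints the argument lists; it does not contact a cluster, and it does not describe how the UVS Manager works internally. The bucket names `rack1` and `node1` and the rule name `by-rack` are hypothetical.

```python
from typing import List


def add_bucket(name: str, bucket_type: str) -> List[str]:
    """Command that creates an empty CRUSH bucket of the given type."""
    return ["ceph", "osd", "crush", "add-bucket", name, bucket_type]


def move_bucket(name: str, parent_type: str, parent_name: str) -> List[str]:
    """Command that moves a bucket under a parent, e.g. a host under a rack."""
    return ["ceph", "osd", "crush", "move", name, f"{parent_type}={parent_name}"]


def replicated_rule(rule: str, root: str, failure_domain: str) -> List[str]:
    """Command that creates a replicated rule spreading copies across failure_domain."""
    return ["ceph", "osd", "crush", "rule", "create-replicated",
            rule, root, failure_domain]


def build_plan() -> List[List[str]]:
    """Hypothetical layout: one rack under the default root, one host in that rack."""
    return [
        add_bucket("rack1", "rack"),
        move_bucket("rack1", "root", "default"),
        move_bucket("node1", "rack", "rack1"),
        replicated_rule("by-rack", "default", "rack"),
    ]


if __name__ == "__main__":
    # Print the commands for review instead of running them.
    for cmd in build_plan():
        print(" ".join(cmd))
```

Printing the plan rather than executing it keeps the example safe to run anywhere and makes the hierarchy change easy to review first.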

Ceph OSD and Monitor

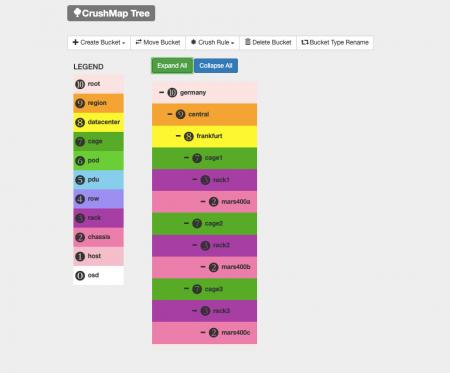

- Monitor: create, view SSD drive SMART data, and check status

- OSD: create, encrypt, restart service, trash, delete, and view drive SMART data and status

- Locate data drives and BlueStore SSDs in the chassis by flashing LEDs

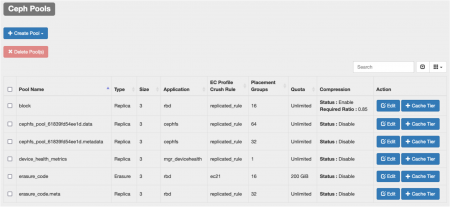

Pools

- Creating replicated and erasure-coded pools

- Editing an existing pool

- Pool data compression

- Adding a fast cache tier on top of a slow pool

- Pool mirroring status
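The choice between a replicated and an erasure-coded pool is largely a capacity trade-off. A replicated pool of size n stores n full copies, so its usable fraction of raw capacity is 1/n. An erasure-coded pool with k data chunks and m coding chunks has a usable fraction of k/(k+m). The sketch below computes both and builds the matching upstream `ceph osd pool create` argument lists. It ignores metadata overhead and full-ratio headroom. The pool names, the profile name, and the 100 TB raw capacity are assumptions for illustration, not UVS Manager defaults.

```python
from typing import List


def usable_fraction_replicated(size: int) -> float:
    """Fraction of raw capacity that holds unique data with `size` copies."""
    if size < 1:
        raise ValueError("replica size must be at least 1")
    return 1.0 / size


def usable_fraction_erasure(k: int, m: int) -> float:
    """Fraction of raw capacity that holds data with k data and m coding chunks."""
    if k < 1 or m < 0:
        raise ValueError("need k >= 1 and m >= 0")
    return k / (k + m)


def create_replicated_pool_cmd(pool: str, pg_num: int) -> List[str]:
    """Argument list for creating a replicated pool (pgp_num set equal to pg_num)."""
    return ["ceph", "osd", "pool", "create", pool,
            str(pg_num), str(pg_num), "replicated"]


def create_erasure_pool_cmd(pool: str, pg_num: int, profile: str) -> List[str]:
    """Argument list for creating an erasure-coded pool from an existing profile."""
    return ["ceph", "osd", "pool", "create", pool,
            str(pg_num), str(pg_num), "erasure", profile]


if __name__ == "__main__":
    raw_tb = 100.0  # hypothetical raw capacity
    rep = raw_tb * usable_fraction_replicated(3)
    ec = raw_tb * usable_fraction_erasure(4, 2)
    print(f"3x replication: {rep:.1f} TB usable")
    print(f"EC 4+2:         {ec:.1f} TB usable")
    print(" ".join(create_replicated_pool_cmd("rbd-pool", 64)))
    print(" ".join(create_erasure_pool_cmd("ec-pool", 64, "ec-4-2")))
```

With these numbers, three-way replication leaves about one third of the raw capacity usable, while a 4+2 erasure-coded pool leaves about two thirds and still tolerates the loss of two chunks.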

Storage Services

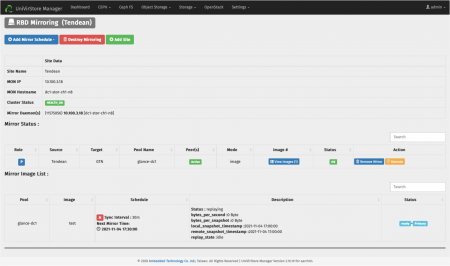

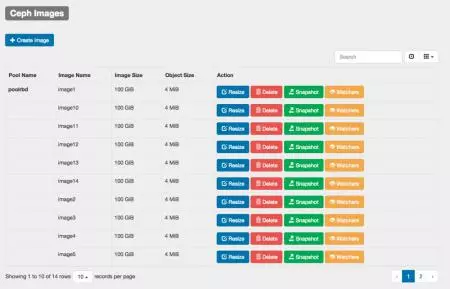

- Manage RBD images: snapshot, clone and flatten, and mirroring.

- RBD mirroring: asynchronously replicate RBD images to another Ceph cluster

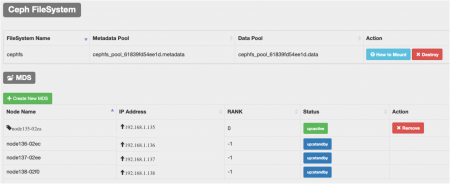
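The snapshot, clone, and flatten operations on RBD images follow a fixed order on the upstream `rbd` command line: snapshot the parent image, protect the snapshot, clone it into a child image, and optionally flatten the child so that it no longer depends on the parent. The sketch below builds that sequence as argument lists without running anything. The pool, image, snapshot, and child names are hypothetical. The protect step applies to the classic clone format and may not be required on newer clusters.

```python
from typing import List


def clone_workflow(pool: str, image: str, snap: str, child: str,
                   flatten: bool = True) -> List[List[str]]:
    """Return the rbd commands for snapshot -> protect -> clone (-> flatten)."""
    snap_spec = f"{pool}/{image}@{snap}"
    child_spec = f"{pool}/{child}"
    steps = [
        ["rbd", "snap", "create", snap_spec],    # point-in-time snapshot of the parent
        ["rbd", "snap", "protect", snap_spec],   # prevent deletion while clones exist
        ["rbd", "clone", snap_spec, child_spec], # copy-on-write child image
    ]
    if flatten:
        # Copy all parent data into the child so it becomes independent.
        steps.append(["rbd", "flatten", child_spec])
    return steps


if __name__ == "__main__":
    for cmd in clone_workflow("rbd-pool", "golden", "v1", "vm-disk-01"):
        print(" ".join(cmd))
```

Leaving `flatten=False` keeps the clone thin and fast to create, at the cost of keeping the parent snapshot around for as long as the child exists.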

- Managing CephFS: create and delete MDS daemons

- Managing MDS failover and failback

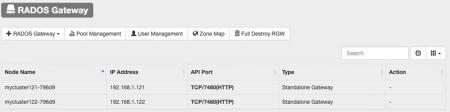

- Deploying RGW for S3 and Swift object storage

- Configuring and setting up Multi-Site for object storage

- Creating object storage user secret keys

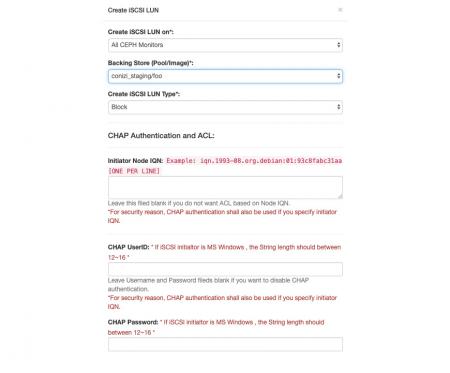

- Deploy iSCSI gateways with multi-path support

- Creating and managing iSCSI LUNs

- Deploy NFS and SMB on gateways

- View OSD and pool usage

- Dashboard monitors cluster and daemon health in real time.

- Prometheus Ceph and Node exporter control and configuration

- Email notification

- Multisite RADOS gateway: Deploy an active-active object storage service. Replicate data asynchronously between multiple Ceph clusters.

- RBD Mirroring: Deploy the rbd-mirror service to back up RBD images bidirectionally with another Ceph cluster. Manage peers, snapshot schedules, and promotion and demotion operations.

- Ceph users: Configure CephX keys and user capabilities.

- Manage the UVS manager users.

- Notifications: Configure alert notification schedule and email.

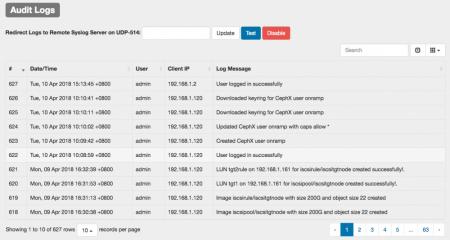

- Audit Log: Record and view users’ operations on the UVS Manager.

- Log management: Review, collect and download logs.

- Monitoring: Users can manage the Prometheus metrics and exporters.

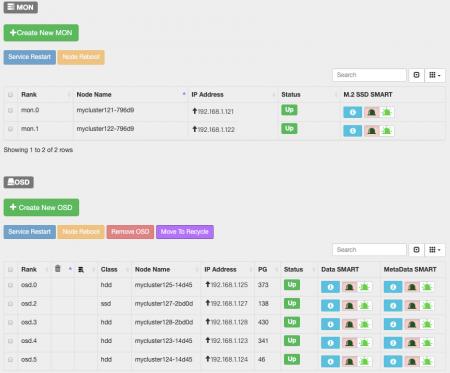

The UVS software updater allows users to update the software packages without disrupting the storage services. Updatable packages may include the user interface, Ceph, the Linux kernel, and the Linux root file system.

The UVS Manager is more than a user interface. Through automation, it embeds the knowledge Ambedded has accumulated from years of supporting, tuning, and testing Ceph.

Applications

- OpenStack backend storage

- Kubernetes cluster backend storage

- VMware / Windows Hyper-V virtualization

- Enterprise storage

- S3 / Swift object storage

- IP camera / CCTV surveillance, Video Management System (VMS)

- Scale-out NAS


