Using Mars 400 Ceph for Disk-to-Disk Backup and RGW Multisite Disaster Recovery

What concerns do most IT users have when planning an enterprise disk-to-disk backup system?

Scalability: Backup capacity does not stay at a fixed size; it keeps growing. Most users therefore look for a storage solution that can scale out on demand.

Fault tolerance & self-management: The solution should stay fault-tolerant without manual intervention or constant oversight.

Simple & always on: The backup solution should increase flexibility, reduce administrative overhead, and keep data available in a straightforward way.

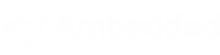

The Ceph storage backup scenario:

A backup server receives data from application servers running on the enterprise network. The backup server organizes the data, runs deduplication or compression, and then saves it to the Ceph storage cluster.
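The deduplication step can be illustrated with a minimal sketch. It assumes fixed-size chunking, SHA-256 fingerprints and zlib compression, all chosen here only for illustration; real backup software usually has its own (often variable-size) chunking and on-disk format. The dictionary `store` is a hypothetical stand-in for the Ceph cluster, and the function names are illustrative.

```python
import hashlib
import zlib

CHUNK_SIZE = 4 * 1024 * 1024  # hypothetical fixed chunk size (4 MiB)


def dedup_and_compress(data: bytes, store: dict, chunk_size: int = CHUNK_SIZE) -> list:
    """Split data into fixed-size chunks and keep one compressed copy per unique chunk.

    `store` maps a SHA-256 hex digest to a compressed chunk and stands in for
    the storage cluster. Returns the ordered list of digests (the "recipe")
    needed to rebuild the original data.
    """
    recipe = []
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        if digest not in store:  # only chunks not seen before are compressed and written
            store[digest] = zlib.compress(chunk)
        recipe.append(digest)
    return recipe


def restore(recipe: list, store: dict) -> bytes:
    """Rebuild the original data by decompressing the chunks listed in the recipe."""
    return b"".join(zlib.decompress(store[digest]) for digest in recipe)


if __name__ == "__main__":
    store = {}
    backup = b"A" * 8 + b"B" * 4 + b"A" * 8
    recipe = dedup_and_compress(backup, store, chunk_size=4)
    print(len(recipe), len(store))  # → 5 2 (5 chunks referenced, 2 unique chunks stored)
    assert restore(recipe, store) == backup
```

Only the unique chunks reach the cluster, which is why deduplication before writing to Ceph reduces the capacity a backup set consumes.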

Depending on which protocol interface the backup server needs, the Ceph cluster can appear to it as a block device (RBD or iSCSI), a file system (CephFS), or an object gateway (RGW).

If the backup servers run Linux and need a shared file system, CephFS is a good fit. If each backup server writes to its own data volume, the user can choose RBD or iSCSI. RBD is preferred when the backup server runs Linux, because the Linux RBD client writes directly to the storage devices in the Ceph cluster instead of going through a gateway.
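The interface guidance above can be summarized as a small decision function. `choose_interface` and `CephInterface` are hypothetical names; checking S3 support first reflects this article's focus rather than a Ceph rule, and the case of a non-Linux server that needs a shared file system is deliberately left unhandled because the guidance does not cover it.

```python
from enum import Enum


class CephInterface(Enum):
    """Ceph access methods mentioned in the text (hypothetical enum)."""
    CEPHFS = "CephFS (shared file system)"
    RBD = "RBD (block device, native Linux client)"
    ISCSI = "iSCSI (block device via gateway)"
    RGW = "RGW (S3 object gateway)"


def choose_interface(runs_linux: bool, needs_shared_fs: bool, speaks_s3: bool = False) -> CephInterface:
    """Pick an interface following the guidance for backup servers."""
    if speaks_s3:
        # Assumption: a backup application with S3 support uses the object gateway.
        return CephInterface.RGW
    if needs_shared_fs:
        if runs_linux:
            return CephInterface.CEPHFS
        raise ValueError("shared file system for non-Linux servers is not covered here")
    # Each backup server writes to its own volume: RBD on Linux, iSCSI otherwise.
    return CephInterface.RBD if runs_linux else CephInterface.ISCSI


if __name__ == "__main__":
    print(choose_interface(runs_linux=True, needs_shared_fs=False).value)
```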

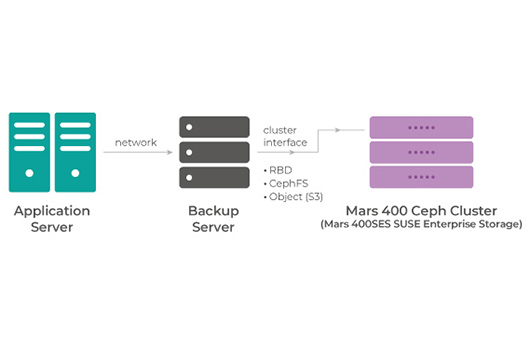

Here we focus on the S3 protocol, which most backup applications now support, because it integrates with Ceph RGW multisite to provide disk-to-disk backup with disaster recovery in a simple zone group setup.

Ceph supports active-active multisite deployments that make data available at multiple locations. With this feature, customers can deploy on-premises S3 cloud storage at different sites, and the clusters can easily back each other up.

Customers can use UVS manager to configure a multisite RADOS Gateway and to set up the zone group.
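UVS manager handles this configuration through its own interface. For readers who want to see what a zone group setup involves underneath, the following sketch builds the equivalent upstream `radosgw-admin` commands for a two-zone setup without running them. It is not a description of what UVS manager executes internally. The realm, zone group and zone names, endpoint URLs and keys are hypothetical parameters, and steps such as creating the synchronization system user (whose keys the secondary zone needs) and restarting the gateways are omitted.

```python
import shlex


def primary_zone_commands(realm: str, zonegroup: str, zone: str, endpoint: str) -> list:
    """radosgw-admin steps on the cluster that hosts the master zone."""
    return [
        ["radosgw-admin", "realm", "create", f"--rgw-realm={realm}", "--default"],
        ["radosgw-admin", "zonegroup", "create", f"--rgw-zonegroup={zonegroup}",
         f"--endpoints={endpoint}", f"--rgw-realm={realm}", "--master", "--default"],
        ["radosgw-admin", "zone", "create", f"--rgw-zonegroup={zonegroup}",
         f"--rgw-zone={zone}", f"--endpoints={endpoint}", "--master", "--default"],
        ["radosgw-admin", "period", "update", "--commit"],
    ]


def secondary_zone_commands(master_url: str, zonegroup: str, zone: str,
                            endpoint: str, access_key: str, secret: str) -> list:
    """radosgw-admin steps on the second cluster, which joins the same realm."""
    return [
        ["radosgw-admin", "realm", "pull", f"--url={master_url}",
         f"--access-key={access_key}", f"--secret={secret}"],
        ["radosgw-admin", "zone", "create", f"--rgw-zonegroup={zonegroup}",
         f"--rgw-zone={zone}", f"--endpoints={endpoint}",
         f"--access-key={access_key}", f"--secret={secret}"],
        ["radosgw-admin", "period", "update", "--commit"],
    ]


if __name__ == "__main__":
    # Print the commands for review instead of executing them.
    for argv in primary_zone_commands("backup-realm", "backup-zg", "zone1",
                                      "http://rgw-zone1.example.com:80"):
        print(shlex.join(argv))
```

Passing each list to `subprocess.run(argv, check=True)` on the appropriate cluster would execute the steps; the sketch only prints them so they can be checked first.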

If the master zone fails, users can use UVS manager to promote the secondary zone to master and redirect the application service to the endpoints of Zone 2, so services and business applications can continue without interruption.
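The two halves of this failover can be sketched as follows, again assuming upstream Ceph tooling rather than UVS manager. `promote_zone_commands` builds the `radosgw-admin` steps that the Ceph multisite documentation gives for making a secondary zone the master; `pick_endpoint` is a hypothetical client-side helper that redirects an application to the first healthy endpoint using a caller-supplied health check. The host names are placeholders.

```python
from typing import Callable, Sequence


def promote_zone_commands(zone: str) -> list:
    """Steps run on the surviving (secondary) cluster to make its zone the master."""
    return [
        ["radosgw-admin", "zone", "modify", f"--rgw-zone={zone}", "--master", "--default"],
        ["radosgw-admin", "period", "update", "--commit"],
        # Afterwards, the RADOS Gateway daemons in this zone must be restarted.
    ]


def pick_endpoint(endpoints: Sequence[str], is_healthy: Callable[[str], bool]) -> str:
    """Return the first healthy S3 endpoint, checking them in priority order."""
    for url in endpoints:
        if is_healthy(url):
            return url
    raise RuntimeError("no healthy S3 endpoint available")


if __name__ == "__main__":
    zones = ["http://rgw-zone1.example.com", "http://rgw-zone2.example.com"]
    down = {"http://rgw-zone1.example.com"}  # simulate a failed master zone
    print(pick_endpoint(zones, lambda url: url not in down))  # → http://rgw-zone2.example.com
```

Keeping the health check as a parameter lets the same helper be tested with a stub, as above, or wired to a real HTTP probe in production.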

This on-premises S3 storage can be integrated with the backup servers, so the customer keeps the same backup cloud storage at multiple locations, as regulations or company governance may require. It can also provide S3 storage directly to application services.

An Example from an Insurance Customer:

The customer wanted cloud storage that lets its insurance salespeople use an app to upload and download policy information. Regulatory constraints ruled out building this service on a public cloud, and the data had to be kept in at least two different locations. The customer therefore uses S3 on Mars 400 Ceph storage at two different data center locations to deliver the cloud service to its salespeople. Data in the bucket on Cluster 1 is actively synced to the bucket on Cluster 2, which keeps the data highly available even if one of the clusters suffers an unexpected failure.
