Using Ceph as the Backup and Archive Repository for Veeam

This case study explains how to use the Mars 400 Ceph storage appliance as a backup repository for Veeam Backup & Replication.

Ceph supports object storage, block storage, and a POSIX file system in a single cluster. Customers can therefore choose the storage protocol that best fits each of their backup strategies.

In this article, we use Ceph block storage (Ceph RBD) and the Ceph file system (CephFS) as backup repositories and compare the durations of backup jobs for virtual machines on Hyper-V and VMware.

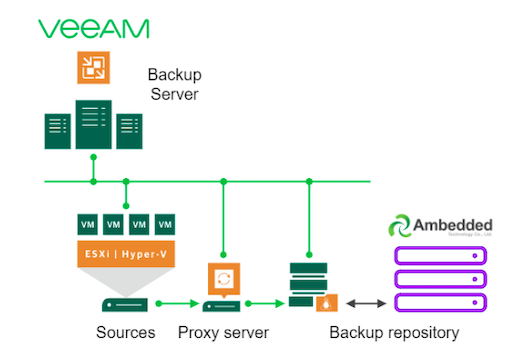

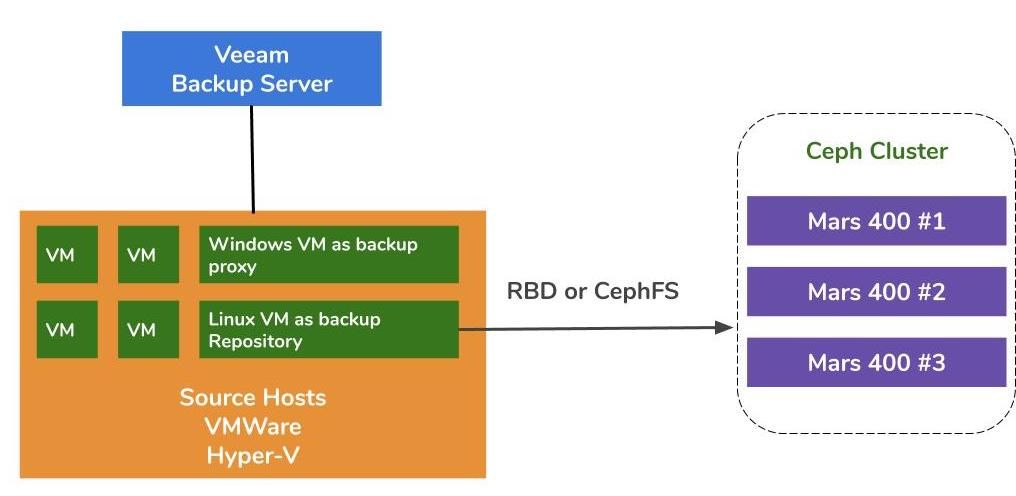

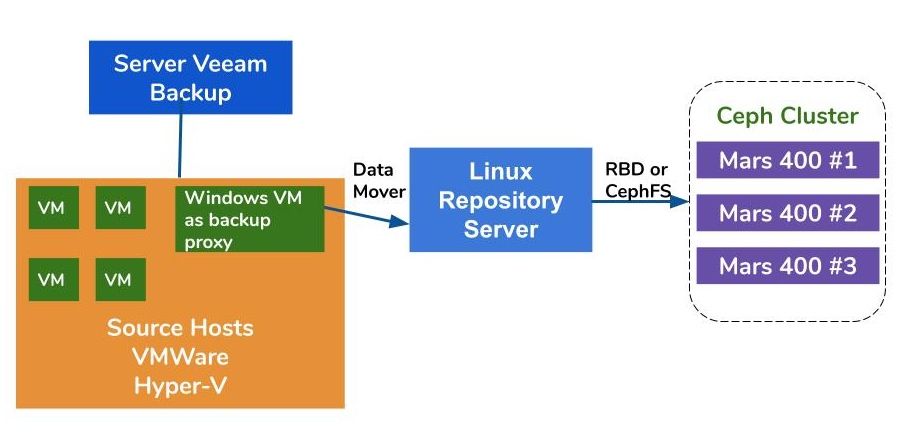

Backup Solution Architecture Using Veeam and Ceph

The architectures for backing up virtual machines on VMware and Hyper-V are similar. Veeam uses data movers to transfer data from the source hosts to the backup repositories. The data movers run on the proxy server and on the repository server. To use Ceph as the backend storage of a backup repository, mount an RBD image or CephFS on a Linux physical server or virtual machine that acts as the repository server.

If the proxy and repository servers are virtual machines inside the hypervisor cluster, data moves between the VM disks, the proxy server, and the repository server at high speed without crossing the physical network. For a large hypervisor cluster, the best configuration is to deploy one proxy server VM and one repository server VM on each VMware host. Alternatively, you can deploy one backup proxy VM on every VMware host and use one off-host repository server to take the repository workload off your production VMware hosts.

There are three ways to use the Ambedded Ceph appliance as a repository for Veeam Backup & Replication. CephFS and RBD block devices can serve as on-premises backup repositories, and S3 object storage can serve as the capacity tier at a remote location.

The white paper at the end of this page explains in detail how to set up the Ceph RBD block device and the CephFS file system as Veeam backup repositories for virtual machines and files.

Testing Environment

- Three Mars 400 appliances with 3 monitors, 20 OSDs, and 1 MDS (metadata server)

- Each Ceph daemon runs on one dual-core Arm A72 microserver

- Operating System: CentOS 7

- Ceph software: Nautilus 14.2.9 Arm64

- Network: 4x 10Gb network per Mars 400

Veeam Backup & Replication 10, Version: 10.0.1.4854

Veeam Backup Server

- CPU: Intel Xeon E5-2630 2.3GHz DUAL

- DRAM: 64GB

- Network: 2x 10Gb sfp+ bonding

- Disk: 1TB for system, 256GB SATA3 SSD for volume

- Windows Server 2019

Veeam Proxy Server

- Co-located with the Veeam Backup Server

Repository Server

- Virtual Machine

◇ CPU: 4 cores 2.3GHz

◇ DRAM: 8GB

◇ Network: bridge

◇ Disk: 50GB virtual disk

◇ OS: CentOS 7.8.2003

- Baremetal Server

◇ CPU: Intel Xeon X5650 2.67GHz DUAL

◇ DRAM: 48GB

◇ Network: 2-port 10Gb sfp+ bonding

◇ Disk: 1TB for system

◇ OS: CentOS 7.6.1810

Hyper-V Host

◇ CPU: Intel Xeon E5-2630 2.3GHz DUAL

◇ DRAM: 64GB

◇ Network: 2-port 10Gb sfp+ bonding

◇ Disk: 1TB for system

◇ Windows Server 2019

VMware Host

◇ CPU: Intel Xeon E5-2630 2.3GHz DUAL

◇ DRAM: 64GB

◇ Network: 2-port 10Gb sfp+ bonding

◇ Disk: 1TB for system

◇ ESXi 6.5

Network: 10GbE switch

Benchmark on Various Setups

To benchmark backup performance, we ran tests against several backup repository configurations with three kinds of backup source: a volume on a SATA SSD in a server, a Windows VM on Hyper-V, and a CentOS 7 VM and a Windows VM on VMware.

(1) Back Up a Volume on an SSD Drive

Table 1. Backing up a volume from a server with a SATA SSD.

| Item | Value |
| --- | --- |
| Disk Size (Data processed) | 237.9GB |
| Data Read from the source | 200.1GB |
| Data Transferred to Ceph after Deduplication and Compression | 69.7GB |
| Deduplication | 1.3X |
| Compression | 2.7X |

Table 2.

| Backup Repository | Duration (sec) | Source (%) | Proxy (%) | Network (%) | Target (%) | Processing Rate (MB/s) | Average Data Write Rate (MB/s) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Linux VM, RBD-replica 3 | 646 | 83 | 33 | 84 | 21 | 554 | 110 |
| Linux VM, CephFS-replica 3 | 521 | 97 | 25 | 31 | 5 | 564 | 137 |
| Linux VM, RBD, EC | 645 | 82 | 34 | 83 | 24 | 554 | 111 |
| Linux VM, CephFS, EC | 536 | 97 | 26 | 27 | 4 | 564 | 133 |
| Linux Server, RBD, EC | 526 | 97 | 21 | 16 | 3 | 561 | 136 |

Note: The Average Data Write Rate is the data transferred divided by the job duration. It represents the workload that each backup job places on the Ceph cluster.
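As a check on the note above, the following minimal sketch recomputes the Average Data Write Rate column of Table 2 from the 69.7GB transferred in Table 1. The conversion factor of 1024 MB per GB is our assumption; it is the factor that reproduces the published figures after rounding. The function and variable names are illustrative only.

```python
# Assumption: 1 GB = 1024 MB. This factor reproduces the rounded values in Table 2.
MB_PER_GB = 1024

# Data transferred to Ceph after deduplication and compression (Table 1).
TRANSFERRED_GB = 69.7

# Job durations in seconds, copied from Table 2.
DURATIONS_S = {
    "Linux VM, RBD-replica 3": 646,
    "Linux VM, CephFS-replica 3": 521,
    "Linux VM, RBD, EC": 645,
    "Linux VM, CephFS, EC": 536,
    "Linux Server, RBD, EC": 526,
}


def average_write_rate_mb_s(transferred_gb: float, duration_s: float) -> float:
    """Average data write rate in MB/s: data transferred divided by duration."""
    return transferred_gb * MB_PER_GB / duration_s


# Round to whole MB/s, as the table does.
rates = {
    repo: round(average_write_rate_mb_s(TRANSFERRED_GB, duration))
    for repo, duration in DURATIONS_S.items()
}

for repo, rate in rates.items():
    print(f"{repo}: {rate} MB/s")
```

The printed values can be compared row by row with the last column of Table 2.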

(2) Back Up a Windows 10 VM on Hyper-V on HDD

In this benchmark, we back up a Hyper-V VM that is stored on a SATA hard drive. The processing rates of these jobs reach the upper limit of the HDD bandwidth. The bottleneck is at the source, which is busy for 99% of the job duration. The workload that the Veeam backup jobs place on the Ceph cluster, the target, is light: the cluster is busy for only 1% to 6% of the job duration.

Compared to the previous benchmark, the processing rate of the VM backup is much lower than that of the SSD backup, mainly because the VM data is stored on a hard drive.

Table 3.

| Item | Value |
| --- | --- |
| Disk Size (HDD) | 127GB |
| Data Read from source | 37.9GB |
| Data Transferred to Ceph after Deduplication and Compression | 21.4GB |
| Deduplication | 3.3X |
| Compression | 1.8X |

Table 4. Backing up a virtual machine image on a SATA3 HDD

| Backup Repository | Duration (sec) | Source (%) | Proxy (%) | Network (%) | Target (%) | Processing Rate (MB/s) | Average Data Write Rate (MB/s) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Linux VM, RBD volume, EC | 363 | 99 | 7 | 3 | 6 | 145 | 60 |
| Linux VM, CephFS volume, EC | 377 | 99 | 7 | 2 | 1 | 142 | 58.1 |
| Linux Server, RBD volume, EC | 375 | 99 | 6 | 2 | 2 | 140 | 58.4 |

Note: The Average Data Write Rate is the data transferred divided by the job duration. It represents the workload that each backup job places on the Ceph cluster.
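The bottleneck reading used in this benchmark can be sketched as follows: the stage with the highest busy percentage among Source, Proxy, Network, and Target is treated as the bottleneck. The percentages below are copied from the "Linux VM, RBD volume, EC" row of Table 4; the function name is illustrative and not part of any Veeam API.

```python
from typing import Dict


def bottleneck(busy_percent: Dict[str, int]) -> str:
    """Return the name of the stage with the highest busy percentage."""
    return max(busy_percent, key=lambda stage: busy_percent[stage])


# Busy percentages for the "Linux VM, RBD volume, EC" job (Table 4).
rbd_vm_job = {"Source": 99, "Proxy": 7, "Network": 3, "Target": 6}

print(bottleneck(rbd_vm_job))
```

For this job the source dominates, which is consistent with the HDD on the Hyper-V host limiting the processing rate.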

(3) Back Up Virtual Machines on ESXi on HDD

This test backs up a CentOS 7 VM and a Windows 10 VM stored on an HDD of a VMware ESXi 6.5 host to a repository backed by a Ceph RBD image with 4+2 erasure code protection.

Table 5.

| Source | CentOS VM | Windows 10 VM |
| --- | --- | --- |
| Disk Size (HDD) | 40GB | 32GB |
| Data Read from source | 1.8GB | 12.9GB |
| Data Transferred to Ceph after Deduplication and Compression | 966MB | 7.7GB |
| Deduplication | 22.1X | 2.5X |
| Compression | 1.9X | 1.7X |

Table 6.

| Backup Source | Duration (sec) | Source (%) | Proxy (%) | Network (%) | Target (%) | Processing Rate (MB/s) | Average Data Write Rate (MB/s) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| CentOS 7 | 122 | 99 | 10 | 5 | 0 | 88 | 8 |
| Windows 10 | 244 | 99 | 11 | 5 | 1 | 93 | 32 |

Note: The Average Data Write Rate is the data transferred divided by the job duration. It represents the workload that each backup job places on the Ceph cluster.
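The benchmarks use both replica 3 pools and a 4+2 erasure-coded pool. These differ in how much raw disk capacity each backed-up gigabyte consumes. The sketch below works through that arithmetic for the 7.7GB transferred for the Windows 10 VM in Table 5. It assumes the standard ratios (a replicated pool stores every object `copies` times, and a k+m erasure-coded pool stores (k+m)/k times the data) and ignores any other cluster overhead.

```python
def raw_overhead_replica(copies: int) -> float:
    """Raw-to-logical capacity ratio of a replicated pool."""
    return float(copies)


def raw_overhead_ec(k: int, m: int) -> float:
    """Raw-to-logical capacity ratio of a k+m erasure-coded pool."""
    return (k + m) / k


# Data transferred to Ceph for the Windows 10 VM (Table 5).
logical_gb = 7.7

print(f"replica 3: {logical_gb * raw_overhead_replica(3):.2f} GB raw")
print(f"EC 4+2:    {logical_gb * raw_overhead_ec(4, 2):.2f} GB raw")
```

Under these assumptions, the 4+2 pool needs half the raw capacity of a replica 3 pool for the same backup data.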

Conclusions

According to the test results, Ceph RBD and CephFS deliver similar performance, which matches our experience from benchmarking RBD and CephFS. Each has its advantages and disadvantages. Because a Ceph RBD image can be mounted on only one host, deploying multiple repository servers requires a separate RBD image for each repository server. Compared to CephFS, RBD is simpler because it does not need metadata servers. However, the capacity of an RBD image is assigned when it is created, so you have to resize the image when you need more space.
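To illustrate the one-image-per-repository-server rule and the later resize step, here is a minimal sketch that only builds the `rbd create` and `rbd resize` command lines and prints them. The pool name, image naming scheme, server names, and sizes are all hypothetical. The sketch covers a plain replicated pool only and does not run anything against a cluster.

```python
from typing import List

POOL = "veeam-backup"  # hypothetical pool name


def image_spec(server: str) -> str:
    """Pool/image name for one repository server (hypothetical naming scheme)."""
    return f"{POOL}/{server}-repo"


def create_image_cmd(server: str, size_mib: int) -> List[str]:
    """Command that creates a dedicated RBD image for one repository server."""
    return ["rbd", "create", "--size", str(size_mib), image_spec(server)]


def resize_image_cmd(server: str, new_size_mib: int) -> List[str]:
    """Command that grows the image when the repository needs more space."""
    return ["rbd", "resize", "--size", str(new_size_mib), image_spec(server)]


TIB_IN_MIB = 1024 * 1024

# One image per repository server, because each image is mounted on one host only.
for server in ["repo-server-1", "repo-server-2"]:
    print(" ".join(create_image_cmd(server, 10 * TIB_IN_MIB)))

# Later, grow the first repository from 10 TiB to 20 TiB.
print(" ".join(resize_image_cmd("repo-server-1", 20 * TIB_IN_MIB)))
```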

If you use CephFS as the repository, you have to deploy at least one metadata server (MDS) in the Ceph cluster, plus a standby metadata server for high availability. Unlike with Ceph RBD, you don’t need to give the file system a quota, so you can treat CephFS as a storage pool without a preset size limit.

In this use case demonstration, each backup job backs up only one VM. The test results show that the average data write rate depends on the processing rate and on the efficiency of data deduplication and compression. A faster source disk gives a higher processing rate and a shorter backup job. Depending on their infrastructure, users can run several concurrent jobs to back up different objects simultaneously. Ceph storage performs very well when supporting multiple concurrent jobs.

A Ceph cluster with 20 HDD OSDs powered by three Ambedded Mars 400 appliances can deliver up to 700MB/s of aggregate write throughput to the 4+2 erasure code pool. Running multiple concurrent backup jobs reduces the overall backup duration. The maximum performance of a Ceph cluster is almost linearly proportional to the total number of disk drives in the cluster.
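As a rough illustration of what these figures imply, the sketch below estimates how many concurrent jobs the quoted 700MB/s could absorb at the per-job write rates measured above, and how the throughput might scale with more drives. It assumes jobs add up without contention and that scaling is perfectly linear, so the results are upper bounds rather than measurements. The function names are illustrative.

```python
import math

CLUSTER_WRITE_MB_S = 700  # aggregate write throughput quoted for the 4+2 EC pool
OSD_COUNT = 20            # HDD OSDs in the tested cluster


def max_concurrent_jobs(per_job_mb_s: float) -> int:
    """Upper bound on concurrent jobs, assuming write rates simply add up."""
    return math.floor(CLUSTER_WRITE_MB_S / per_job_mb_s)


def scaled_throughput_mb_s(osds: int) -> float:
    """Estimated throughput for a larger cluster, assuming linear scaling per OSD."""
    return CLUSTER_WRITE_MB_S / OSD_COUNT * osds


print(max_concurrent_jobs(136))    # SSD source, "Linux Server, RBD, EC" (Table 2)
print(max_concurrent_jobs(60))     # Hyper-V VM on HDD, "Linux VM, RBD volume, EC" (Table 4)
print(scaled_throughput_mb_s(40))  # hypothetical cluster with twice as many OSDs
```

In practice the source disks, proxies, and network would limit the job count well before these bounds are reached.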

In this use case, we did not test S3 object storage as the backup repository. S3 object storage can be used as the capacity tier in a Veeam Scale-Out Backup Repository and as the target archive repository for NAS backup. You can easily set up a RADOS gateway and create object storage users with the Ambedded UVS manager, the Ceph management web GUI.

Download

Use Ceph as the repository for Veeam Backup & Replication white paper

How to set up the Ceph RBD block device and the CephFS file system as the backup repository of Veeam for backing up virtual machines and files
