Ceph Block Storage

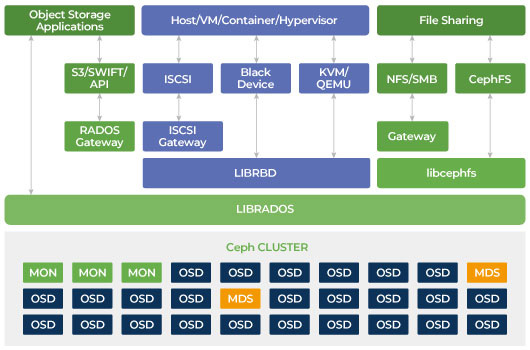

The Ambedded Ceph appliance is a scalable storage platform that provides highly available block devices with disaster recovery and snapshot capabilities.

Ceph block devices are thin-provisioned and resizable, and they offer high data durability.
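Thin provisioning and resizing follow from how an RBD image is laid out. The image is striped over RADOS objects (4 MiB each by default), and an object is created only when data is first written to it. The toy model below is a minimal sketch of that behavior under those assumptions. It is not librbd code, and the class `ThinImage` and its methods are hypothetical.

```python
OBJECT_SIZE = 4 * 1024 * 1024  # 4 MiB, the default RBD object size


class ThinImage:
    """Hypothetical toy model of a thin-provisioned, resizable block image.

    The image is split into fixed-size objects; an object is allocated
    only when something is first written to it.
    """

    def __init__(self, size: int, object_size: int = OBJECT_SIZE):
        self.size = size
        self.object_size = object_size
        self.objects: dict[int, bytearray] = {}  # object index -> contents

    def _chunks(self, offset: int, length: int):
        """Yield (object index, offset in object, chunk length) for a byte range."""
        if offset < 0 or length < 0 or offset + length > self.size:
            raise ValueError("I/O outside the image")
        end = offset + length
        while offset < end:
            idx, obj_off = divmod(offset, self.object_size)
            n = min(end - offset, self.object_size - obj_off)
            yield idx, obj_off, n
            offset += n

    def write(self, offset: int, data: bytes) -> None:
        pos = 0
        for idx, obj_off, n in self._chunks(offset, len(data)):
            # Allocate the backing object lazily, on first write.
            obj = self.objects.setdefault(idx, bytearray(self.object_size))
            obj[obj_off:obj_off + n] = data[pos:pos + n]
            pos += n

    def read(self, offset: int, length: int) -> bytes:
        out = bytearray()
        for idx, obj_off, n in self._chunks(offset, length):
            obj = self.objects.get(idx)
            # Unallocated objects read back as zeros.
            out += obj[obj_off:obj_off + n] if obj is not None else bytes(n)
        return bytes(out)

    def resize(self, new_size: int) -> None:
        """Grow or shrink the image; shrinking discards data past the new end."""
        if new_size < self.size:
            for idx in list(self.objects):
                start = idx * self.object_size
                if start >= new_size:
                    del self.objects[idx]
                elif start + self.object_size > new_size:
                    # Zero the tail of the object that straddles the new end.
                    cut = new_size - start
                    self.objects[idx][cut:] = bytes(self.object_size - cut)
        self.size = new_size

    def allocated_bytes(self) -> int:
        return len(self.objects) * self.object_size
```

For example, a 1 TiB `ThinImage` that has received a single 1-byte write allocates only one 4 MiB object. Growing it allocates nothing until new regions are written.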

Ceph block devices deliver high performance and scale out as you add nodes to the cluster. Linux-based clients can use the RBD kernel module, which provides the best performance between the clients and the cluster. You can easily manage and configure block device images through UVS Manager, the Ambedded Ceph management user interface.

Ceph Block Device Features on the Mars 400 Appliance

- Thin provisioning: Storage space is consumed only as data is written.

- Resizable: Grow or shrink an image as your needs change.

- Exclusive lock: Prevents multiple processes from accessing the same RADOS Block Device (RBD) image in an uncoordinated fashion; commonly used with virtualization.

- Client-side cache: Persistent write-back and persistent read-only cache

- Quality of Service: Limit client read/write IOPS and throughput.

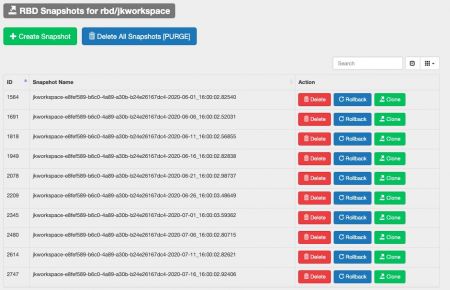

- Snapshot & rollback: Create snapshots of an image to retain a history of its state. You can roll the image back to a previous version.

- Image clone: Quickly create copy-on-write clones of an image (e.g., a VM image).

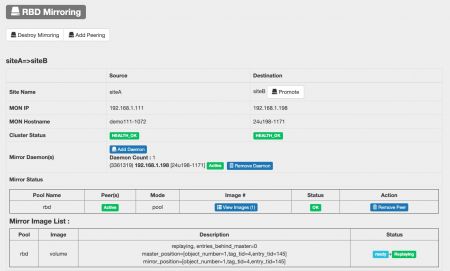

- Remote mirroring: Journal-based and snapshot-based block device replication

- Third-party integration: QEMU, libvirt, OpenStack, Kubernetes, CloudStack, iSCSI
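The Quality of Service item in the list above caps client IOPS and throughput per image. Upstream librbd exposes options such as `rbd_qos_iops_limit` and `rbd_qos_bps_limit` for this. The sketch below shows one common way to enforce such a cap, a token bucket. Treat it as an illustration of rate limiting, not as the actual implementation in librbd or UVS Manager. `TokenBucket` and `admitted` are hypothetical names.

```python
class TokenBucket:
    """Generic token bucket: refills at `rate` tokens/s and holds at most `burst`."""

    def __init__(self, rate: float, burst: float):
        self.rate = rate
        self.burst = burst
        self.tokens = burst  # start full
        self.last = 0.0      # time of the previous call, in seconds

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Return True if an I/O costing `cost` tokens may proceed at time `now`."""
        # Refill for the elapsed time, capped at the burst size.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


def admitted(bucket: TokenBucket, now: float, requests: int, cost: float = 1.0) -> int:
    """Count how many of `requests` same-sized I/Os are admitted at time `now`."""
    return sum(bucket.allow(now, cost) for _ in range(requests))
```

With a 100 IOPS bucket, a burst of 150 requests at time 0 admits 100. Half a second later, another 50 tokens have accrued. A real throttle would normally hold an over-limit I/O until enough tokens accumulate rather than reject it; the boolean here only marks when the I/O could proceed. Using bytes as the cost turns the same bucket into a throughput limit.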

All of the above features are available on the Mars 400 Ceph storage appliance through UVS Manager.

Block Storage Primary Use Cases

- Virtual machine volumes

- Kubernetes/OpenStack

- Database

- Scale-out SAN

Manage RBD and Mirroring with UVS Manager

Configuring Backend Pools

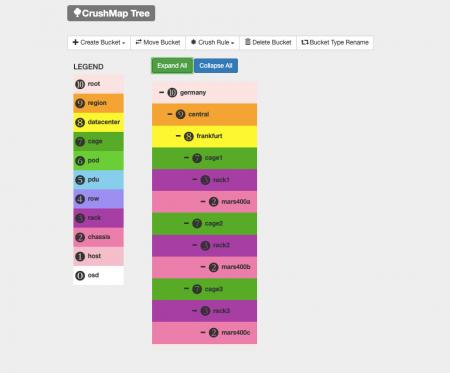

Block devices use Ceph RADOS pools as their backend. You can use either an erasure-coded pool or a replicated pool, and you can choose whether to compress the data.
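The choice between a replicated and an erasure-coded pool is mostly a trade-off between usable capacity and redundancy. Below is a minimal sketch of the arithmetic. It assumes that a replicated pool keeps `size` full copies of each object and that an erasure-coded pool splits each object into `k` data chunks plus `m` coding chunks. Compression is left out because its savings depend on the data. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Replicated:
    size: int  # full copies of every object

    def efficiency(self) -> float:
        return 1 / self.size

    def tolerated_failures(self) -> int:
        # Data survives losing size - 1 failure domains
        # (I/O may pause earlier if fewer than min_size copies remain).
        return self.size - 1


@dataclass(frozen=True)
class ErasureCoded:
    k: int  # data chunks per object
    m: int  # coding chunks per object

    def efficiency(self) -> float:
        return self.k / (self.k + self.m)

    def tolerated_failures(self) -> int:
        # Any k of the k + m chunks can rebuild the object.
        return self.m


def usable_tb(raw_tb: float, scheme: Union[Replicated, ErasureCoded]) -> float:
    """Usable capacity before compression, ignoring full ratios and overhead."""
    return raw_tb * scheme.efficiency()
```

With 100 TB of raw capacity, a 3-replica pool yields about 33.3 TB of usable space and a 4+2 erasure-coded pool about 66.7 TB. Both survive the loss of two failure domains. In upstream Ceph, an RBD image on an erasure-coded pool also needs `allow_ec_overwrites` enabled on that pool. The image keeps its metadata in a replicated pool and uses the EC pool as its data pool (`rbd create --data-pool`).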

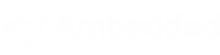

When you create a Ceph replicated pool in UVS Manager, you define the replica size, the number of placement groups (PGs), the application (block), the CRUSH rule, the quota, and whether compression is enabled.
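A PG number is often chosen with the rule of thumb from earlier Ceph documentation. You take about 100 PGs per OSD, divide by the pool size (the replica count, or k + m for an erasure-coded pool), and round up to a power of two. The sketch below encodes that heuristic. The function name and its default are assumptions for illustration; this is not necessarily how UVS Manager picks its defaults.

```python
def suggested_pg_count(num_osds: int, pool_size: int, pgs_per_osd: int = 100) -> int:
    """Rule-of-thumb PG count for a single pool (hypothetical helper).

    pool_size is the replica count for a replicated pool, or k + m for an
    erasure-coded pool. The result is rounded up to a power of two.
    """
    if num_osds <= 0 or pool_size <= 0 or pgs_per_osd <= 0:
        raise ValueError("all arguments must be positive")
    target = num_osds * pgs_per_osd / pool_size
    pg_num = 1
    while pg_num < target:
        pg_num *= 2  # round up to the next power of two
    return pg_num
```

For example, 24 OSDs with 3 replicas gives a target of 800, which rounds up to 1024. The heuristic assumes one pool holds most of the data. When several pools share the same OSDs, the per-OSD budget is split among them. On Ceph releases with the PG autoscaler enabled, the cluster can adjust `pg_num` itself.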

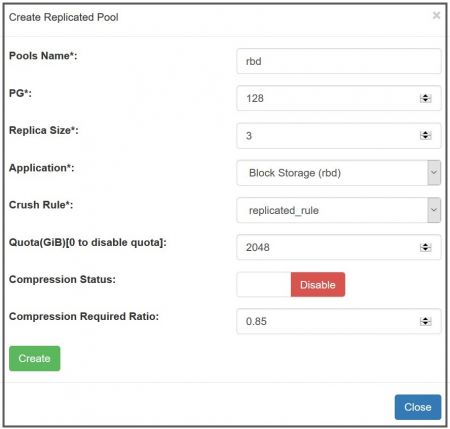

When you create a Ceph erasure-coded pool in UVS Manager, you define the erasure code profile, the number of placement groups (PGs), the application (block), the CRUSH rule, the quota, and whether compression is enabled.

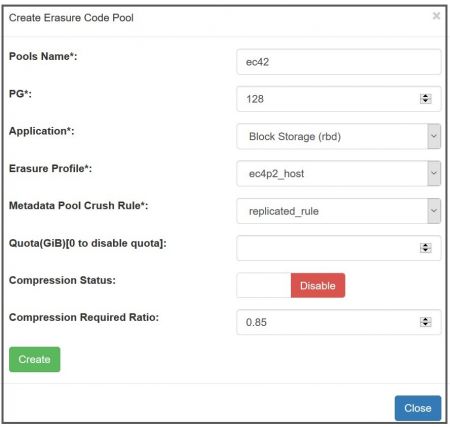

Manage Images

Image Snapshot/Clone/Flatten
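Snapshots, clones, and flattening all rest on copy-on-write layering. A clone starts empty and reads through to a snapshot of its parent until it writes its own data. Flattening copies the remaining parent data into the clone so that it no longer depends on the parent. The model below is a simplified sketch of those semantics under stated assumptions. Images are fixed-size arrays of blocks, and snapshots are stored as frozen copies of the block map, whereas Ceph preserves overwritten data copy-on-write at the RADOS object level. The `Image` class and its methods are hypothetical.

```python
from typing import Optional


class Image:
    """Hypothetical toy model of an image with snapshots, clones, and flatten.

    Blocks live in a dict; a snapshot is a frozen copy of that dict rather
    than object-level copy-on-write.
    """

    def __init__(self, num_blocks: int, parent: Optional[tuple["Image", str]] = None):
        self.num_blocks = num_blocks
        self.blocks: dict[int, bytes] = {}               # data written to this image
        self.snapshots: dict[str, dict[int, bytes]] = {}
        self.parent = parent                             # (parent image, snapshot name)

    def _read_from(self, blocks: dict[int, bytes], idx: int) -> Optional[bytes]:
        if idx in blocks:
            return blocks[idx]
        if self.parent is not None:
            # Not written here: fall through to the parent's snapshot.
            parent_image, snap_name = self.parent
            return parent_image.read(idx, snap=snap_name)
        return None  # never written anywhere: reads as zeros

    def read(self, idx: int, snap: Optional[str] = None) -> Optional[bytes]:
        blocks = self.blocks if snap is None else self.snapshots[snap]
        return self._read_from(blocks, idx)

    def write(self, idx: int, data: bytes) -> None:
        if not 0 <= idx < self.num_blocks:
            raise IndexError("block outside the image")
        self.blocks[idx] = data  # never modifies the parent

    def snapshot(self, name: str) -> None:
        self.snapshots[name] = dict(self.blocks)

    def rollback(self, name: str) -> None:
        self.blocks = dict(self.snapshots[name])

    def clone(self, snap_name: str) -> "Image":
        if snap_name not in self.snapshots:
            raise KeyError(snap_name)
        return Image(self.num_blocks, parent=(self, snap_name))

    def flatten(self) -> None:
        """Copy all data still inherited from the parent, then detach it."""
        if self.parent is None:
            return
        # The clone's own snapshots also inherit from the parent, so fill them too.
        for blocks in [self.blocks, *self.snapshots.values()]:
            for idx in range(self.num_blocks):
                if idx not in blocks:
                    data = self._read_from(blocks, idx)
                    if data is not None:
                        blocks[idx] = data
        self.parent = None
```

For example, suppose you clone a `golden` snapshot of a base VM image and then patch the base. The clone still reads the golden data, and after `flatten()` it keeps that data with no parent. Older RBD clone formats also require the parent snapshot to be protected before cloning; the sketch omits that step.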

RBD Mirroring

RBD mirroring replicates selected images, or all images in a pool, from the local Ceph cluster to a Ceph cluster at another site. If the primary site has an outage, you can resume service by promoting the non-primary (secondary) cluster. The RBD mirroring function in UVS Manager lets you pair two Ceph clusters, designate which one is primary and which is secondary, and mirror either pool to pool or image to image.
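Snapshot-based mirroring can be pictured as periodic mirror snapshots on the primary image. Only the blocks that changed since the previous snapshot are shipped to the peer, and on failover the secondary copy is promoted. The sketch below models one image under simplifying assumptions: discards and the journal-based mode are not modeled, and `Site` and `SnapshotMirror` are hypothetical names, not the rbd-mirror daemon's design. In upstream Ceph, the corresponding operator steps are `rbd mirror image promote --force`, `rbd mirror image demote`, and `rbd mirror image resync`.

```python
class Site:
    """One copy of a mirrored image at one cluster/site (hypothetical)."""

    def __init__(self, name: str, primary: bool = False):
        self.name = name
        self.primary = primary
        self.blocks: dict[int, bytes] = {}


class SnapshotMirror:
    """Toy model of snapshot-based mirroring of a single image."""

    def __init__(self, primary: Site, secondary: Site):
        self.primary, self.secondary = primary, secondary
        self.last_snapshot: dict[int, bytes] = {}  # state last shipped to the peer
        self.full_resync = False

    def write(self, site: Site, idx: int, data: bytes) -> None:
        # Only the primary copy accepts writes; the mirror copy is read-only.
        if not site.primary:
            raise PermissionError(f"{site.name} is non-primary")
        site.blocks[idx] = data

    def sync(self) -> int:
        """Take a mirror snapshot on the primary and ship it; return blocks sent."""
        snap = dict(self.primary.blocks)
        if self.full_resync:
            # Discard the peer's diverged copy and send everything.
            self.secondary.blocks = dict(snap)
            sent = len(snap)
            self.full_resync = False
        else:
            # Ship only blocks that changed since the previous mirror snapshot.
            delta = {i: d for i, d in snap.items() if self.last_snapshot.get(i) != d}
            self.secondary.blocks.update(delta)
            sent = len(delta)
        self.last_snapshot = snap
        return sent

    def failover(self) -> None:
        """Primary site is lost: promote the secondary copy.

        Writes made after the last sync never reached the secondary and are
        lost; the old primary is demoted and must be fully resynced.
        """
        self.primary.primary = False
        self.secondary.primary = True
        self.primary, self.secondary = self.secondary, self.primary
        self.full_resync = True
```

Any write made on the primary after the last `sync()` does not survive a failover. This is why the mirror snapshot interval bounds how much recent data can be lost.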

Create and Manage RBD Images with Ceph Dashboard

The Ambedded Ceph and SUSE Enterprise Storage appliances let users manage Ceph with both Ambedded UVS Manager and the Ceph Dashboard. UVS Manager offers more deployment features, while the Ceph Dashboard offers more detailed configuration options. In this video, you can learn how to use the Ceph Dashboard to manage block devices.

Related Products

Mars 400PRO

The Mars 400 Ceph appliance is designed to meet high-capacity, cloud-native data storage needs. It uses HDDs to benefit from a low cost per TB. Mars 400 provides...

Ceph Management Web User Interface (UVS Manager)

Unified Virtual Storage (UVS) is a software package that integrates the Linux operating system, Ceph distributed storage software, a management console, and the web-based...